Real or Fake 3D?

BinaryVision renders real 3D

"While film studios are cashing in on 3D films, many are 'faking it' by converting 2D movies into 3D post-production. Worse, they're not upfront in their advertising, and many people feel ripped off after paying extra fees for the '3D Experience.'" - Philip Dhingra (realorfake3d)

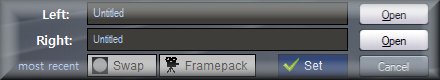

When shooting a real three-dimensional movie, two camera lenses positioned about 6.5 centimeters apart (roughly the spacing of human eyes) are paired to capture views of the same scene from slightly different angles. The difference in viewpoint between the left and right lenses is known as parallax. BinaryVision's brain-wave-patterned algorithms merge the left and right views of two paired inputs to render 3D videos that can be watched without special glasses (autostereoscopy).
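The link between lens spacing and parallax can be sketched with the standard pinhole stereo model: for two parallel cameras a baseline B apart with focal length f (in pixels), a point at depth Z shifts horizontally between the left and right images by d = f·B/Z pixels. The sketch below assumes an idealized parallel-axis rig, uses the 6.5 cm spacing above as B, and picks a hypothetical focal length of 1000 px. It illustrates the geometry only and is not BinaryVision's algorithm.

```python
def disparity_px(depth_m: float, baseline_m: float = 0.065, focal_px: float = 1000.0) -> float:
    """Horizontal shift (pixels) of a point between the left and right images.

    Assumes an idealized parallel-axis pinhole stereo rig: d = f * B / Z.
    baseline_m defaults to the ~6.5 cm lens spacing; focal_px is a hypothetical value.
    """
    if depth_m <= 0:
        raise ValueError("depth_m must be positive")
    return focal_px * baseline_m / depth_m


# Nearer objects show more parallax; distant ones approach zero.
for depth in (1.0, 2.0, 5.0, 10.0):
    print(f"{depth:5.1f} m -> {disparity_px(depth):5.1f} px")
```

Halving the distance doubles the disparity, which is why nearby objects carry most of the depth cue.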

Create 3D videos without a camera.

Watch 3D videos without special glasses.

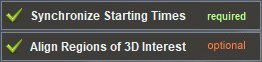

BinaryVision software can also convert between conventional 3D video frame-packing formats. It supports industry-standard layouts including side-by-side, half-width side-by-side, anaglyph, and more.
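As an illustration of what converting between frame-packing formats involves, the sketch below unpacks a half-width side-by-side frame into its left and right views, stretches each back to full width, and recombines them as a red-cyan anaglyph (red channel from the left eye, green and blue from the right). It assumes frames are plain nested lists of RGB tuples and uses simple column duplication for resampling; these are illustrative choices, not BinaryVision's implementation, and production converters typically use better resampling and color-optimized anaglyph methods.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), 0-255
Image = List[List[Pixel]]      # rows of pixels


def split_side_by_side(frame: Image) -> Tuple[Image, Image]:
    """Split a side-by-side frame: left half is the left-eye view, right half the right-eye view."""
    width = len(frame[0])
    if width % 2:
        raise ValueError("side-by-side frame width must be even")
    half = width // 2
    return [row[:half] for row in frame], [row[half:] for row in frame]


def stretch_columns(view: Image) -> Image:
    """Undo a half-width squeeze by repeating every column (nearest-neighbour)."""
    return [[pixel for pixel in row for _ in (0, 1)] for row in view]


def to_red_cyan_anaglyph(left: Image, right: Image) -> Image:
    """Red channel from the left view, green and blue from the right view."""
    return [
        [(l_px[0], r_px[1], r_px[2]) for l_px, r_px in zip(l_row, r_row)]
        for l_row, r_row in zip(left, right)
    ]


def half_sbs_to_anaglyph(frame: Image) -> Image:
    """Convert a half-width side-by-side frame to a full-width red-cyan anaglyph."""
    left, right = split_side_by_side(frame)
    return to_red_cyan_anaglyph(stretch_columns(left), stretch_columns(right))


# A 1x4 half-width frame: two left-eye pixels followed by two right-eye pixels.
frame = [[(255, 0, 0), (200, 10, 10), (0, 0, 255), (0, 20, 200)]]
print(half_sbs_to_anaglyph(frame))
```

For a full-width side-by-side source, the same split applies but the stretch step is skipped, since each half already holds a full-resolution view.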

Above are 3D scenes from "The Legend of Hercules" (2014). Below are 3D scenes from "Jurassic World" (2015). Displayed here for educational purposes only.


Introducing 3rdEyeVideo

The first 3D viewing devices, stereoscopes, were built in the 1800s by Sir Charles Wheatstone and Sir David Brewster. The View-Master became a popular toy in the 1950s and 1960s, and more recently the Google Cardboard viewer stirred interest in virtual reality (VR) applications.

Stereographs are still images that simulate a 3D effect by presenting two views of a scene, one for the left eye and one for the right. Wigglegrams (also known as piku-piku, wiggle 3-D, wobble 3-D, and wiggle stereoscopy) alternate the left and right images of a single pair in a short animated loop.
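A wigglegram loop can be sketched as a simple playback schedule: the left and right images alternate, each held for a fixed time, and the sequence repeats. In the sketch below, the file names and the 120 ms hold time are hypothetical placeholders, not values from BinaryVision.

```python
from typing import List, Tuple


def wigglegram_loop(left: str, right: str, frame_ms: int = 120, cycles: int = 3) -> List[Tuple[str, int]]:
    """Return (image, duration_ms) pairs that alternate the left and right views.

    The sequence is meant to be played on repeat (e.g. as a looping GIF).
    frame_ms is an assumed per-frame hold time, not a value from the source.
    """
    if frame_ms <= 0 or cycles <= 0:
        raise ValueError("frame_ms and cycles must be positive")
    loop: List[Tuple[str, int]] = []
    for _ in range(cycles):
        loop.append((left, frame_ms))
        loop.append((right, frame_ms))
    return loop


print(wigglegram_loop("left.jpg", "right.jpg", cycles=1))
```

At 120 ms per frame, one left-right cycle lasts 240 ms, so the view flips back and forth about four times per second.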

BinaryVision extends 3D filmmaking with innovative GPU-accelerated 3rdEyeVideos (also known as wigglevids) based on brain wave frequencies. By intermixing the left and right input streams, its algorithms can render full-motion videos rather than still images.
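One simple way to intermix two synchronized video streams into a wiggle video is to take each output frame from the same time index, switching between the left and right stream at a fixed rate so that motion continues while the viewpoint alternates. The sketch below assumes both streams share a frame rate and length; the 24 fps and 4 Hz switching rate are hypothetical defaults, since the source does not state which brain wave frequencies BinaryVision uses, and this is not BinaryVision's algorithm.

```python
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")


def interleave_streams(
    left: Sequence[Frame],
    right: Sequence[Frame],
    fps: int = 24,
    wiggle_hz: int = 4,
) -> List[Frame]:
    """Build a wiggle video from two time-aligned streams.

    Output frame i is frame i of either the left or the right stream, so motion
    keeps playing; the chosen eye flips wiggle_hz full left/right cycles per second.
    """
    if fps <= 0 or wiggle_hz <= 0 or 2 * wiggle_hz > fps:
        raise ValueError("need fps > 0 and 0 < 2 * wiggle_hz <= fps")
    out: List[Frame] = []
    for i, (l_frame, r_frame) in enumerate(zip(left, right)):
        # Each cycle has a left half and a right half; integer math picks
        # which half output frame i falls in (0 = left, 1 = right).
        eye = (i * 2 * wiggle_hz // fps) % 2
        out.append(l_frame if eye == 0 else r_frame)
    return out


left = [f"L{i}" for i in range(12)]
right = [f"R{i}" for i in range(12)]
print(interleave_streams(left, right))
```

Unlike a still wigglegram, the frame index keeps advancing, so the result plays as full-motion video with the viewpoint swapping every few frames.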

3D scenes from "Avatar: The Way of Water" (2022). Displayed here for educational purposes only.

"Stereographs on Steroids"

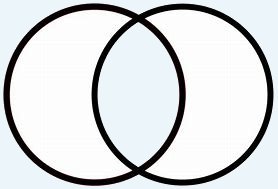

The 3D convergence area (stereopsis, or the stereoscopic sweet spot) usually lies within the overlapping field of view of the two lenses. The intersecting ellipse (vesica piscis) allocates binocular vision (%), and inside this convergence region (the subject's face in this example) the image appears three-dimensional with little or no rocking or wobble.
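Why the convergence region shows little or no wobble can be sketched with a standard pinhole stereo model: if the left and right views are shifted horizontally so that the convergence distance Z_c has zero disparity, a point at depth Z keeps a residual parallax of f·B·(1/Z - 1/Z_c) pixels. Points at Z_c land in the same place in both views and so stay still when the views alternate, while nearer and farther points shift in opposite directions. The 6.5 cm baseline, 1000 px focal length, and 1.5 m subject distance are assumed values, and sign conventions vary between tools.

```python
def residual_parallax_px(
    depth_m: float,
    convergence_m: float,
    baseline_m: float = 0.065,
    focal_px: float = 1000.0,
) -> float:
    """Parallax (pixels) left after shifting the views so convergence_m has zero disparity.

    Positive = nearer than the convergence plane, negative = farther (one common convention).
    """
    if depth_m <= 0 or convergence_m <= 0:
        raise ValueError("depths must be positive")
    return focal_px * baseline_m * (1.0 / depth_m - 1.0 / convergence_m)


face_m = 1.5  # hypothetical distance to the subject's face
for depth in (1.0, 1.5, 3.0, 10.0):
    print(f"{depth:5.1f} m -> {residual_parallax_px(depth, face_m):+6.1f} px")
```

The face at 1.5 m gets zero residual parallax, so it does not rock between frames, while the foreground and background move in opposite directions around it.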
